Google is building an AI model to talk to dolphins

Google has recently unveiled DolphinGemma, an artificial intelligence model designed to analyse and mimic dolphin vocalisations. Developed in collaboration with the Wild Dolphin Project (WDP) and Georgia Tech, the model processes decades of recorded dolphin sounds to identify patterns and predict which sounds are likely to come next, much as language models predict the next word in a sentence, according to an official Google blog post.

According to Google, the Wild Dolphin Project has been studying wild Atlantic spotted dolphins in the Bahamas since 1985, compiling an extensive dataset of vocalisations linked to specific behaviours. These include signature whistles, which function like names, and more aggressive sounds such as squawks.

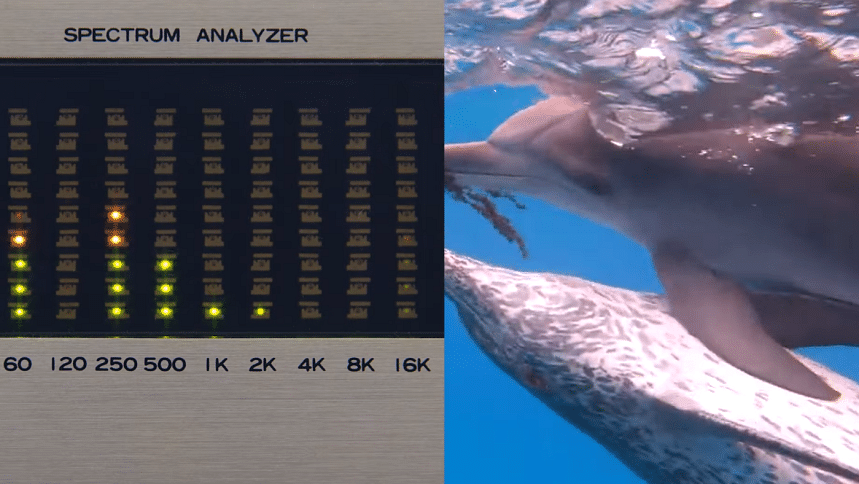

DolphinGemma uses Google's SoundStream audio technology to break these sounds down into analysable segments. At 400 million parameters, the model is relatively lightweight and can run on Pixel phones, which allows researchers to process data in real time while in the field.
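The idea of predicting the next sound from the ones before it can be illustrated with a very small sketch. This is not DolphinGemma's architecture or Google's code: it is a toy model that simply counts which sound follows which, and the token labels and function names (`train_bigram`, `predict_next`) are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(sequences):
    """Count, for each token, which token follows it in the training sequences."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical token streams standing in for segmented recordings.
# The labels are made up; a real system would use learned audio tokens.
recordings = [
    ["whistle_a", "click", "whistle_b", "squawk"],
    ["whistle_a", "click", "whistle_b", "click"],
    ["whistle_b", "squawk", "whistle_a", "click"],
]

model = train_bigram(recordings)
print(predict_next(model, "whistle_a"))  # prints "click"
```

A model of the kind described in the article would learn far richer patterns from far more data, but the principle is the same: given the sounds so far, estimate what is likely to follow.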

To facilitate basic two-way interaction, researchers pair the AI with the 'CHAT' system, an underwater computer that links synthetic whistles with objects like seaweed or toys. Dolphins exposed to this system may learn to mimic these sounds to request specific items, effectively creating a rudimentary shared vocabulary between humans and dolphins.

The WDP team is currently using DolphinGemma to investigate hidden structures within dolphin communication, aiming to uncover language-like rules. Google plans to release the model to the public later this summer, enabling researchers studying other dolphin species to adapt and apply it in their own work. Google also says that the next phase of development will incorporate Pixel 9 phones to speed up sound analysis and response, which could improve real-time interaction with dolphins.

While the technology is promising, it does not yet provide a direct translation of the 'dolphin language'. Instead, it identifies patterns and supports structured communication experiments. The long-term aim is to close the gap in understanding dolphin communication, rather than fully decode their natural speech. Whether it eventually leads to meaningful interspecies dialogue remains uncertain, but it represents an exciting new direction in the study of marine intelligence.

For all latest news, follow The Daily Star's Google News channel.

